Signalgate and the Enduring Relevance of OPSEC in 2025

The following is a post by Mark Barwinski, the opening keynote speaker for Swiss Cyber Storm 2025. Mark has over 20 years of cybersecurity leadership experience spanning financial services, professional consulting, manufacturing, and government intelligence. In this blog post, he shares first-hand experiences of OPSEC failures from his time at the NSA, and offers strong advice for realistic OPSEC practices in 2025. By publishing this article for the first time, Swiss Cyber Storm hopes to provide a basis for further discussion.

For more info about Mark Barwinski: https://www.linkedin.com/in/markbarwinski/

Executive Summary

In March 2025, senior U.S. national security officials exposed details of classified military strikes against Houthi targets through improper use of the Signal messaging app, an event now widely referred to as “Signalgate.” This significant operational security (OPSEC) failure highlights crucial vulnerabilities within government communication practices and offers lessons for corporate security protocols. This paper examines the Signalgate incident, extracts critical lessons from historical OPSEC successes and failures, analyzes Signal’s limitations, and emphasizes actionable strategies for corporate leaders.

Effective security relies fundamentally on the consistent application of OPSEC principles, cultural awareness, robust communication protocols, and exemplary leadership behaviors. Technological advancements alone cannot safeguard sensitive information. By implementing structured, disciplined communication practices and fostering a culture of security awareness, corporate leaders can significantly mitigate risks from espionage, leaks, and cyber threats—protecting their competitive advantage and, crucially, ensuring the safety of their personnel.

Introduction: The Breach at the Highest Levels

On March 24, 2025, The Atlantic revealed a significant breach in U.S. national security: top officials had mistakenly included one of its journalists in a Signal messaging group titled “Houthi PC Small Group Chat.” The group, comprising America’s most senior national security leaders (including the National Security Advisor, Secretary of Defense, Vice President, CIA Director, and others), shared detailed classified information about an imminent military operation against Houthi targets. These communications revealed sensitive operational details, notably involving Israeli intelligence assets providing real-time confirmations before and after the military strike.

The consequences were serious, exposing not only critical intelligence methods but also systemic flaws in operational security at the highest levels. Similar security failures take place in the private sector too, where sensitive discussions frequently occur through inadequately secured communication channels. What governmental and corporate OPSEC failures have in common is not primarily technical shortcomings, although these play a part, but lax attitudes and a failure to practice fundamental security concepts.

This paper explores the Signalgate incident’s broader implications, highlighting lessons that corporate entities can apply to prevent similar operational security failures. By integrating common-sense OPSEC principles into daily practices, organizations can protect sensitive information, maintain operational integrity, safeguard their competitive positions against increasingly sophisticated threats, and ensure the safety of their employees in risky geopolitical environments.

Signalgate Incident Analysis: A Cascading Security Catastrophe

The Signalgate incident reveals a systemic collapse of operational security at the highest levels of American government, going far beyond an isolated mistake. The cascade began when National Security Advisor Mike Waltz mistakenly added The Atlantic’s editor-in-chief, Jeffrey Goldberg, to the Signal group, believing he was communicating with another government official. This critical initial lapse in verifying group membership quickly escalated into a series of alarming disclosures.

Secretary of Defense Pete Hegseth subsequently shared detailed operational plans approximately two hours prior to a scheduled military strike against Houthi targets. His messages explicitly detailed the deployment of F-18 fighter jets launching at precisely 12:15 pm ET and a second strike wave at 2:10 pm ET, the launch of MQ-9 strike drones, and sea-based Tomahawk cruise missiles commencing strikes at 3:36 pm ET. Such precise operational specifics provided adversaries ample opportunity to alert targeted individuals or deploy effective countermeasures. Yet Secretary Hegseth insists that none of this information was sensitive enough to be considered classified.

Vice President Vance, requesting operational confirmation, prompted National Security Advisor Waltz to further compound the disclosure by confirming that the U.S. had “visual identification” of the primary target, identified as a leading missile strategist entering his girlfriend’s building—which subsequently collapsed from the strike. This explicit disclosure jeopardized Israeli human intelligence (HUMINT) assets, potentially compromising their lives and years of meticulous intelligence-gathering efforts.

Further troubling was the style of communication among these senior officials, characterized by unnecessary mutual reinforcement, personal praise, and informal congratulatory exchanges, like immature children seeking acceptance. Such behavior contrasts sharply with the disciplined, concise, and highly professional communication typically expected at the highest strategic levels, which I witnessed during 12 years of close collaboration in mixed military and civilian environments across three continents, including one year in Afghanistan. Experienced military commanders know that collateral damage is highly likely and that American lives may also be at risk during such missions. Perhaps it is because they recognize that this is not “War-by-Chat” on Signal that my experience working with such leaders differs from what we witnessed in this exchange. In this instance, the world witnessed this leadership’s complete callousness towards the human cost. Instead, they seemed more concerned with sprinkling appropriate emojis throughout their patting of each other’s backs.

Lastly, special envoy Steve Witkoff’s participation from Moscow, an epicenter of sophisticated signals intelligence and espionage operations, significantly increased the risk exposure. This risk was intensified by the heightened geopolitical tensions of the past several years, marked by sabotage operations and assassination plots across Europe. Just days following Signalgate, The New York Times published “The Secret History of the War in Ukraine,” outlining extensive U.S. intelligence support that directly resulted in significant Russian military losses, including several generals, during this multi-year conflict. It is therefore reasonable to conclude that, given the opportunity, Russia would likely exploit the compromised U.S. operational details to retaliate, potentially placing American pilots in severe jeopardy or allowing the Houthi leader to escape.

Ultimately, Signalgate demonstrates a breakdown in OPSEC discipline, highlighting critical vulnerabilities in both communication practices and operational culture at the highest governmental levels. Failing to follow some fundamental practices can result in catastrophic real-world consequences.

Personal Insights: Operational Lessons Learned

I joined the National Security Agency as an intern in 2004, performing protocol reverse engineering and seeking to identify potentially sensitive or classified information in everyday Department of Defense (DoD) network traffic. By 2008, I had assumed the role of Chief of the Joint Communications Security Monitoring Activity (JCMA) Europe (a.k.a. CIRCUITKEG). This experience gives me valuable insight into the systemic vulnerabilities exposed by the Signalgate incident. At JCMA, our mission was uniquely focused: monitoring our own communications through the eyes of adversaries, identifying critical vulnerabilities in seemingly secure systems, and rigorously enforcing operational security (OPSEC) practices.

One key lesson consistently reinforced during my tenure was that most security breaches do not arise from sophisticated technical exploits but rather from basic failures of discipline, awareness, and operational culture. At JCMA Europe, seemingly innocuous details like aircraft parking patterns or travel itineraries, casually shared through unsecured channels, consistently posed significant security risks.

Operating from our windowless secure facility, we maintained constant vigilance—our screens flickering 24/7 with communication intercepts, our analysts hunting for fragments of sensitive information appearing where they shouldn’t. Unlike conventional security that builds walls, we looked for what seeped through the cracks.

In one example of innocuous information having potentially catastrophic consequences, vehicle parking patterns could reveal to an adversary the best attack windows against VIP delegations. During a VIP visit to Europe, my team detected a disturbing pattern in the logistics communications. Through careful analysis of ground transportation arrangements, aircraft movements, and parking configurations on the tarmac, we could determine precisely whether this most senior of U.S. representatives was aboard the aircraft. The truly alarming realization came when we confirmed that these same patterns were visible to anyone with binoculars from a freeway near the airfield. Guidance was issued for executive protection teams to thwart potential adversarial attacks by changing these telltale practices.

In another instance, we intercepted unencrypted emails/faxes containing detailed travel itineraries for the NSA and DIA directors’ upcoming visit to Kabul, Afghanistan. The messages, sent through unclassified channels, included specific routes through Kabul and exact arrival times at multiple locations—essentially a targeting package for anyone seeking to attack the motorcade. My team worked through the night, coordinating security details to implement completely new routes and timing.

These operational security gaps extend beyond obvious breaches. Intelligence professionals have long recognized how seemingly innocuous patterns reveal sensitive operations. In the oft-cited “pizza box intelligence” phenomenon, increased late-night food deliveries to specific Pentagon sections, coupled with office lights burning late into the night, can be correlated with operational surges. This demonstrates how indirect indicators can reveal classified activities, and how adversaries piece together intelligence from unexpected sources to anticipate upcoming U.S. military operations.

The vulnerabilities I confronted at JCMA Europe weren’t confined to government operations. Corporate executives traveling through our region faced similar risks—their movements, meeting patterns, and communications potentially revealing negotiation strategies, merger plans, or product launches to competitors and foreign intelligence services alike. The same methodologies we developed to protect military operations now provide the foundation for corporate security in an increasingly hostile business intelligence landscape.

Historical OPSEC Lessons: Successes and Failures with Lasting Relevance

The Signalgate incident is not an outlier—it’s the latest in a long continuum of operational security breaches. Understanding historical OPSEC successes and failures offers insights for both government leaders and corporate decision-makers operating in increasingly adversarial digital environments.

OPSEC Successes: Gaining Strategic Advantage through Control of Information

Operation Bodyguard (World War II):

The D-Day deception campaign remains a landmark in OPSEC excellence. Through an elaborate mix of double agents, fake radio traffic, decoy equipment, and false intelligence, Allied forces convinced the German high command that the invasion would occur at Pas-de-Calais instead of Normandy. This misdirection drastically reduced German defenses at the real landing sites and saved thousands of lives. For businesses, this serves as a clear demonstration of the competitive advantage that controlled narrative and strategic misinformation can provide—especially during high-stakes negotiations, market launches, or legal disputes.

Operation Neptune Spear (2011):

The raid to eliminate Osama bin Laden exemplified disciplined information compartmentalization. Planning was tightly held within a select circle, and even senior cabinet officials were kept in the dark until the final phase. The result was total tactical surprise and mission success under extraordinary geopolitical pressure. For corporate leadership, this underscores the importance of access controls, especially in M&A transactions, product development, or strategic pivots where a leak could destroy leverage or first-mover advantage.

CIA Abbottabad Cover Operation:

In preparation for the bin Laden raid, CIA operatives used a fake vaccination campaign to collect DNA samples. This ingenious tactic allowed operatives to confirm bin Laden’s presence without compromising the larger operation. The takeaway: even in constrained environments, creative cover mechanisms can support secure intelligence collection. In corporate terms, this is akin to using benign pretexts—like routine audits or supplier vetting—to quietly gather insight without revealing strategic intent.

These three examples demonstrate the resounding success that can be achieved when information is controlled and managed as a resource. Determining what you share, when you share it, with whom you share it, and how you share it can yield significant advantages.

OPSEC Failures: When Lapses Become Liabilities

USS Cole Bombing (2000):

A crew member’s seemingly harmless email about an upcoming port call in Yemen was later posted online. Terrorists used this information to time an attack, killing 17 U.S. sailors. This tragic event illustrates how even low-level disclosures can have lethal consequences. In today’s business world, similar risks exist when employees post about travel, client visits, or sensitive projects on social media. A single careless message can tip off competitors or hostile actors to strategic moves.

Russia in Ukraine (2014):

Russian military involvement in Ukraine was exposed not by spies or satellites, but by Instagram posts. Soldiers’ selfies, complete with geotags and unit identifiers, contradicted the Kremlin’s public denials. This modern OPSEC failure shows how metadata—often invisible to users—can be weaponized. Corporate teams need to be equally cautious with geotagged photos, Slack discussions, and cloud-shared files, which can reveal patterns, locations, and affiliations to competitors or hostile entities.

Technologies evolve, but the foundational principles of OPSEC remain constant. Control over who knows what, when, and how remains the backbone of operational integrity—whether it’s a military campaign or a corporate initiative. Leaders who underestimate the importance of information discipline—especially in a world saturated with sensors, metadata, and digital exhaust—invite unnecessary risk.

Relevance of OPSEC in Corporate Environments Today

Why OPSEC Matters for Businesses Now More than Ever

Corporate leaders in 2025 face threats rivaling those once exclusive to nation-states. Private enterprises are targeted by states, criminal syndicates, and espionage units not just for IP and profit, but for geopolitical advantage. Key industries like energy, aerospace, banking, pharmaceuticals, and advanced tech are now prime targets, not peripheral actors.

The threat landscape has expanded dramatically, with connected devices creating innumerable points of vulnerability. Adversaries deploy advanced persistent threats while AI transforms data analysis capabilities. Corporate targets face sophisticated social engineering based on detailed research and psychological profiles.

Organizations now exist within a global intelligence ecosystem where previously overlooked communications—contract talks, M&A planning, product roadmaps, infrastructure plans—are actively surveilled. Effective corporate OPSEC extends beyond technical safeguards to human behavior, device usage, and communication protocols.

The Signalgate incident exemplifies what businesses unknowingly risk daily: unsecured channels, unverified participants, and casual sharing of critical information—often with little awareness of consequences.

Swiss Government Case Study: Bern’s Use of WhatsApp and Threema

When the Signalgate story broke in the United States, it naturally prompted reflection here in Switzerland: “Could this happen here?” I believe current practices make it easy for foreign intelligence services. Officially, we pride ourselves on stricter communication protocols: the Federal Council uses Threema Work, ministers receive encrypted secondary phones, and personal devices are excluded from sensitive sessions. But those of us with the luxury of a multi-decade perspective can attest that policy and practice don’t always align. Human factors (habit, convenience, and assumption) remain our biggest vulnerability. This was unfortunately demonstrated by Viola Amherd, President of the Swiss Confederation in 2024, when she admitted during an interview with Swiss Public Television (SRF) in January 2024 that, although provided with an encrypted telephone, she chooses to use her iPhone instead. While in-person meetings are a solid approach to discussing sensitive topics, failing to adhere to device segregation practices unnecessarily exposes the country’s top leadership to interception. Additionally, a trail of breadcrumbs and clues leaking from business-as-usual conversations may hand adversaries significant and valuable intelligence. As with the earlier pizza-box example, we may not realize that trivial and innocuous activities or conversations can add up to much more.

The truth is, even within Switzerland’s layered federalism, we see inconsistency. While Bern city has adopted Threema, the cantonal executive council still coordinates on WhatsApp. The local culture often leans toward pragmatism: if WhatsApp works, why change it? Yet that very logic is what makes us vulnerable. Encryption alone cannot compensate for weak verification, unclear boundaries between personal and professional use, or relaxed attitudes toward access control. We might not have had a “Signalgate” moment yet, but the conditions for one exist here as well—particularly when decisions are rushed, and trust is assumed rather than confirmed.

What we learned in 2025 is that technology doesn’t enforce discipline—people do. And no system, no matter how Swiss, is immune to lapses if the people using it treat protocol as optional. For Swiss institutions and companies alike, the challenge is not to adopt more apps—it’s to embed security awareness in everyday decisions. We have the tools. Now we need to reinforce the mindset.

Corporate Parallel: WhatsApp Misuse in a Sensitive Business Setting

In early 2025, I witnessed an OPSEC breakdown during a client exchange on WhatsApp. Added to a group chat with fourteen participants—including general managers from a major international firm and project managers from both sides—I watched as discussion quickly turned to implementation timelines, challenges, and sensitive project details.

What was alarming: three unidentified phone numbers participated without anyone questioning their presence. The implicit assumption—if they were added, they belonged—mirrored exactly the failure underlying Signalgate: blind trust in digital group membership without verification.

The timing was striking, as Signalgate had just broken in the news that week. Fortunately, minimal persuasion was needed to shut down the WhatsApp group and redirect communication to secured corporate email, where identities could be verified and proper controls maintained.
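The membership check that was missing in both cases can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Participant` type, the roster of out-of-band verified numbers, and the function names are hypothetical and do not correspond to any WhatsApp or Signal API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Participant:
    phone: str          # number as displayed in the group chat
    display_name: str   # self-chosen name, not proof of identity


def normalize(phone: str) -> str:
    """Keep digits only, so spacing and a leading '+' do not matter."""
    return "".join(ch for ch in phone if ch.isdigit())


def unverified(participants, roster):
    """Return participants whose number is not on the approved roster.

    `roster` is a hypothetical mapping of phone numbers to names that
    were confirmed out of band (call-back, in person, corporate directory).
    """
    approved = {normalize(number) for number in roster}
    return [p for p in participants if normalize(p.phone) not in approved]


def safe_to_share(participants, roster) -> bool:
    """Sensitive details are shared only when every member is verified."""
    return not unverified(participants, roster)


# Illustrative data only.
roster = {
    "+41 79 555 01 01": "A. Project Lead",
    "+41 79 555 01 02": "B. General Manager",
}
group = [
    Participant("+41795550101", "Anna"),
    Participant("+41 79 555 01 02", "Ben"),
    Participant("+1 202 555 0199", "JG"),
]

for p in unverified(group, roster):
    print(f"Unverified member: {p.display_name} ({p.phone})")
```

The point of the sketch is the default: an unknown number blocks sharing until someone confirms who it belongs to, rather than being trusted because it was added.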

Communication Security Technologies and Limitations

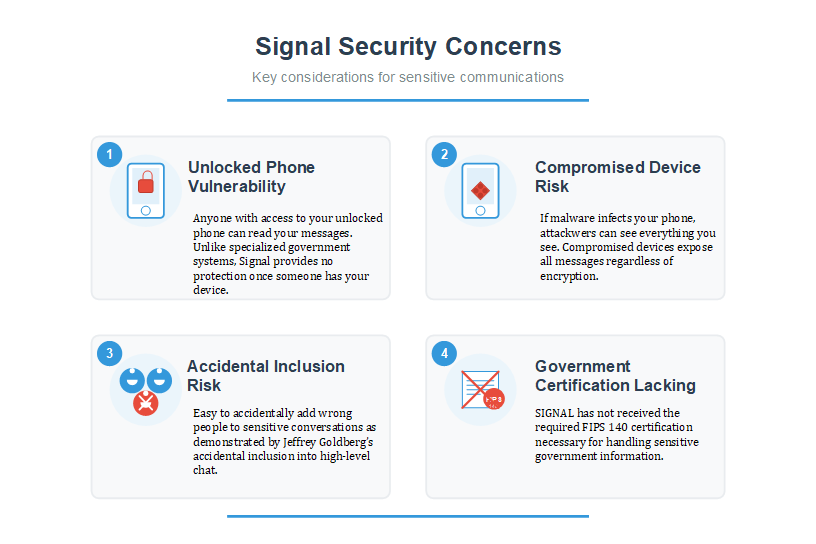

Signal’s Role in Signalgate: The Limits of Encryption

Signal is widely trusted for its end-to-end encryption, but the Signalgate breach highlighted its serious limitations for sensitive communication. Messages are only protected in transit—once they reach a device, they are decrypted and fully exposed to anyone with access. If a device is compromised by malware or left unlocked, encryption offers no defense.

Signal also lacks critical safeguards: there’s no robust identity verification for group members, no access controls, and no certification for handling classified data (such as FIPS 140). In Signalgate, these weaknesses enabled the accidental inclusion of a journalist in a high-level national security thread, allowing real-time intelligence to be leaked. Even without that mistake, the data could have been exfiltrated had the personal devices been compromised by foreign intelligence services. We don’t yet know if this was the case, but we know, as reported by Vice President Vance himself, that his personal device was compromised in October of 2024 by Chinese intelligence agencies.

The core issue wasn’t a failure of technology, but of operational discipline. Tools like Signal were never meant for command-and-control or strategic coordination. When personal devices and casual apps are used for critical communication, the true vulnerability isn’t the platform; it’s the people using it without policy, process, or accountability.

Sectera, VIPER, and the Gap Between Civilian and Government Comms

Government-classified communication systems like NSA’s STU-III, General Dynamics’ Sectera Edge, or VIPER phones are built with OPSEC as their foundation. These devices—used by senior U.S. and allied officials—feature hardened hardware, tamper-proof operating systems, multi-factor authentication, remote wiping capabilities, and classified-level encryption. Physically separated from personal channels, they require distinct credentials, hardware tokens, or biometric access. Their design premise is clear: don’t trust users to self-protect; enforce protection systematically.

Yet these tools have drawbacks. They’re expensive, cumbersome, and unpopular with non-technical users, unable to match the intuitive appeal of iPhones—explaining Viola Amherd’s preference. Officials often resort to Signal or WhatsApp precisely because hardened devices are less intuitive and slower. This convenience gap encourages risky workarounds, especially under pressure. Similarly, in corporate environments, secure collaboration platforms frequently lose to expedient solutions like Dropbox, Gmail, or Slack because official tools seem excessively rigid or complex.

Security needn’t sacrifice usability; it should accommodate actual work patterns. Government-grade tools demonstrate that information integrity can coexist with practical functionality. President Obama’s BlackBerry illustrates this balance. The device was initially deemed too insecure, so NSA engineers extensively modified it with hardware and software enhancements, transforming a consumer device into a hardened communication tool with encrypted messaging, vulnerable features removed, and a closed ecosystem that filtered communications through a vetted whitelist, creating a secure enclave within an insecure platform.

Despite highly restricted access—limited to a small, pre-approved group with layered controls—it functioned effectively because leadership demanded it. Obama’s BlackBerry exemplified security enforcement that maintained speed, responsiveness, and usability.

Practical OPSEC Recommendations for Business

Organizations that treat OPSEC as a leadership function, not an IT function, will be best positioned to operate with confidence and control.

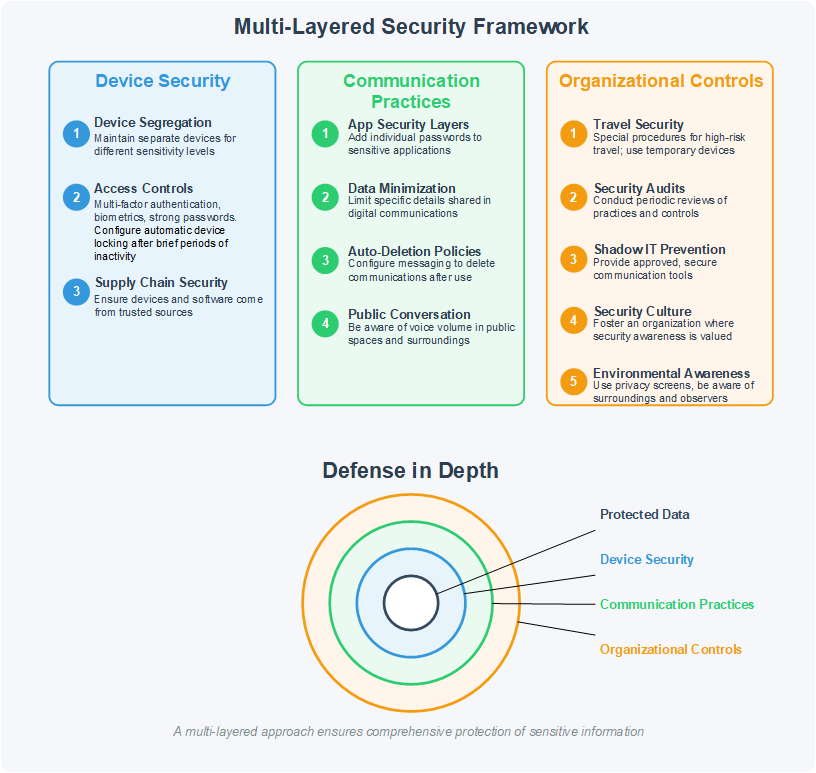

Today’s corporate leaders are unlikely to have access to NSA-hardened devices or to Sectera or VIPER encrypted phones. However, the following approach will facilitate stronger and more secure communications while on the go:

Device Security

- Device Segregation Strategy – Maintain separate devices for different sensitivity levels of information. Consider having dedicated devices for your most sensitive communications that never leave corporate control and are protected to the same standard as your managed infrastructure.

- Comprehensive Access Controls – Implement multi-factor authentication, biometric verification, and strong passwords on all devices. Configure automatic device locking after brief periods of inactivity.

- Supply Chain Security – Ensure that devices and software come from trusted sources and haven’t been compromised before reaching your organization.

Communication Practices

- Application Security Layers – Add individual application passwords to sensitive apps, creating multiple security layers an attacker would need to penetrate.

- Data Minimization Practices – Limit the specific details shared in digital communications. Ask whether exact times, locations, names, or numbers are truly necessary in each message.

- Auto-Deletion Policies – Configure messaging platforms to automatically delete sensitive communications after they’ve served their purpose, reducing the persistent attack surface.

- Public Conversation Discipline – Be acutely aware of how far your voice carries in public spaces like trains, restaurants, and airport lounges. Sensitive conversations overheard in public have compromised countless operations and business deals.
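
As a rough illustration of the data minimization practice above, a pre-send check could flag exact times, dates, coordinates, or flight numbers so the sender can ask whether they are truly necessary. The sketch below is an assumption-laden example, not a vetted tool: the pattern set and the `flag_specifics` helper are hypothetical, and simple regular expressions will miss many phrasings and raise false positives.

```python
import re

# Hypothetical patterns for details that are often unnecessary in a message.
PATTERNS = {
    "exact time": re.compile(r"\b\d{1,2}:\d{2}(?:\s*(?:am|pm))?\b", re.IGNORECASE),
    "date": re.compile(r"\b\d{1,2}[./]\d{1,2}[./]\d{2,4}\b"),
    "coordinates": re.compile(r"-?\d{1,3}\.\d{3,},\s*-?\d{1,3}\.\d{3,}"),
    "flight number": re.compile(r"\b[A-Z]{2}\s?\d{2,4}\b"),
}


def flag_specifics(message: str):
    """Return (label, matched text) pairs for details worth a second look."""
    findings = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(message):
            findings.append((label, match.group(0)))
    return findings


draft = "Landing LX 1234 at 14:35, pickup at hotel entrance."
for label, text in flag_specifics(draft):
    print(f"Consider removing {label}: {text!r}")
```

A check like this only prompts the question; the decision about what the recipient actually needs to know stays with the sender.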

Organizational Controls

- Travel Security Protocols – Implement special procedures for international travel, especially to high-risk countries. Consider using temporary “burner” devices when traveling to locations with sophisticated surveillance capabilities.

- Regular Security Audits – Conduct periodic reviews of communication practices and security controls to identify and address emerging vulnerabilities.

- Shadow IT Prevention – Provide approved, secure communication tools that meet user needs to prevent employees from turning to unauthorized applications.

- Security Culture Development – Foster an organizational culture where security awareness is valued, and proper communications practices are consistently followed.

- Environmental Security Awareness – Use privacy screens to prevent visual eavesdropping, be conscious of conversation volume in public spaces, and maintain awareness of who might be observing your activities.

The growing sophistication of surveillance capabilities means corporate leaders must recognize that they too may be targeted by advanced persistent threats, particularly when traveling internationally or working in strategic industries. The case of Saudi dissident Jamal Khashoggi, tracked through compromised mobile devices before his murder, demonstrates the extreme risks posed by device exploitation.

Conclusion

Signalgate should not be viewed as an isolated government communications incident. The same operational gaps, informal communication habits, and misplaced reliance on consumer-grade apps exist across boardrooms and executive suites worldwide. The difference is only one of visibility—government failures make headlines; corporate ones often remain hidden until it’s too late.

At its core, OPSEC is not a technical feature or a compliance checkbox. It is a mindset. The tools matter, yes, but without disciplined leadership, clear policies, and a culture that understands why information protection matters, those tools will fail. Businesses that want to thrive in a contested, surveilled, and competitive world must approach communication security with the same seriousness as financial controls or legal risk.

The future belongs to those who treat information as a strategic asset and who protect it accordingly. In 2025 and beyond, OPSEC must be elevated to the C-suite, embedded in day-to-day operations, and championed as a core leadership responsibility.

All rights reserved - @markbarwinski